Sanjay Rathee, Arti Kashyap

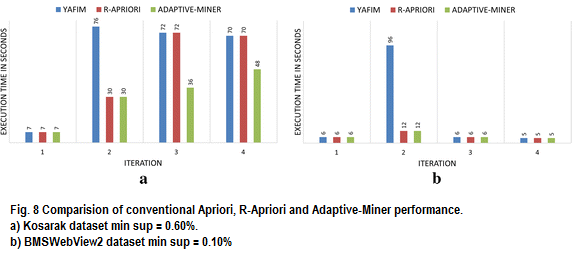

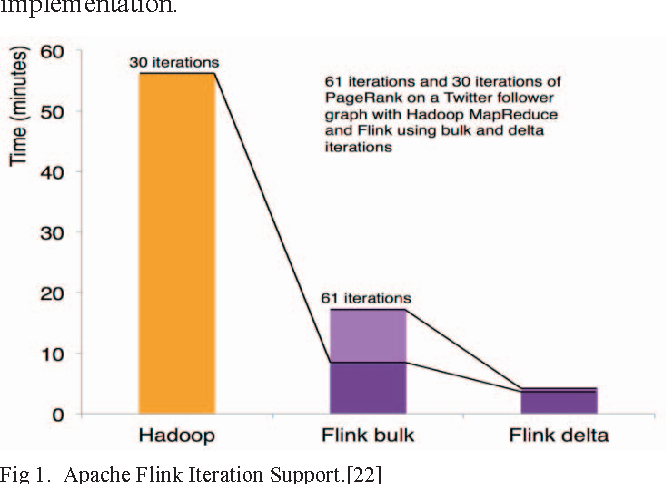

Extracting useful information from large datasets is one of the most important research problems, and association rule mining is one of the best methods for this purpose. The most important part of association rule mining is finding possible associations between items in large transaction-based datasets, that is, finding frequent patterns. Many algorithms exist for finding frequent patterns, but the Apriori algorithm remains a preferred choice because it is easy to implement and naturally suited to parallelization. Many single-machine variants of Apriori exist, but the massive amount of data available today exceeds the capacity of a single machine. Therefore, to meet the demands of this ever-growing data, a multi-machine Apriori algorithm is needed. For distributed applications of this type, MapReduce is a popular fault-tolerant framework. Hadoop is one of the best open-source software frameworks that follows the MapReduce approach, providing distributed storage and distributed processing of huge datasets on clusters built from commodity hardware.
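To make the frequent-pattern step concrete, here is a minimal single-machine sketch of classic Apriori. It is not the authors' Hadoop implementation. Support counting is split into a per-transaction "map" step and a summing "reduce" step to show why the algorithm parallelizes naturally: on a cluster, each map call could run on a different node. The function names (`map_phase`, `count_support`, `generate_candidates`, `apriori`), the toy transactions, and the absolute `min_support` threshold are illustrative assumptions.

```python
from collections import Counter
from functools import reduce
from itertools import combinations
from operator import add

# Hypothetical toy dataset used only for illustration.
EXAMPLE_TRANSACTIONS = [
    {"bread", "milk"},
    {"bread", "diaper", "beer", "eggs"},
    {"milk", "diaper", "beer", "cola"},
    {"bread", "milk", "diaper", "beer"},
    {"bread", "milk", "diaper", "cola"},
]


def map_phase(transaction, candidates):
    """'Map' step: count 1 for every candidate itemset contained in one transaction."""
    return Counter(c for c in candidates if c <= transaction)


def count_support(transactions, candidates):
    """'Reduce' step: sum the per-transaction partial counts into global supports."""
    partials = (map_phase(t, candidates) for t in transactions)
    return reduce(add, partials, Counter())


def generate_candidates(frequent_prev, k):
    """Join frequent (k-1)-itemsets, then prune any candidate with an infrequent (k-1)-subset."""
    items = list(frequent_prev)
    candidates = set()
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            union = items[i] | items[j]
            # Apriori property: every subset of a frequent itemset must also be frequent.
            if len(union) == k and all(
                frozenset(s) in frequent_prev for s in combinations(union, k - 1)
            ):
                candidates.add(union)
    return candidates


def apriori(transactions, min_support):
    """Return {itemset: support count} for all itemsets with support >= min_support."""
    transactions = [frozenset(t) for t in transactions]
    # Level 1: every distinct item is a candidate.
    candidates = {frozenset([item]) for t in transactions for item in t}
    frequent = {}
    k = 1
    while candidates:
        counts = count_support(transactions, candidates)
        level = {c: n for c, n in counts.items() if n >= min_support}
        frequent.update(level)
        k += 1
        candidates = generate_candidates(set(level), k)
    return frequent


if __name__ == "__main__":
    result = apriori(EXAMPLE_TRANSACTIONS, min_support=3)
    for itemset, n in sorted(result.items(), key=lambda kv: (len(kv[0]), sorted(kv[0]))):
        print(sorted(itemset), n)
```

With `min_support=3`, the toy data yields four frequent single items and four frequent pairs. The only size-3 candidate, `{bread, milk, diaper}`, occurs in just two transactions, so the search stops there. Each level repeats a full pass over the data, and this repeated counting is the cost that a distributed (MapReduce) version spreads across machines.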